Why the human still matters in the development and validation of the next generation of ADAS

The advent of ADAS features has unleashed significant challenges for the already overstretched test and engineering departments of the automotive industry. Establishing trust between the human occupant and the ADAS-driven car ‘brain’ has become a paramount objective for OEMs and Tier Ones. However, a pivotal question arises when ADAS sensors and software struggle to interpret real road conditions accurately. It’s this quandary that’s prompting a shift in priorities for automotive engineers, with Driver-in-the-Loop (DIL) simulation increasingly topping their wish lists.

All levels of ADAS require complex validation

It’s important to note that the testing and validation of ADAS features is not confined to the higher and more complex levels of autonomy. The mandatory introduction of Lane Keep Assist and Autonomous Emergency Braking in 2022 for even the most basic new cars and light vans on sale in the EU meant that every all-new car now has some sensor interaction with the brakes or steering. More ambitious endeavours are already being trialled in China, such as NIO’s tests of cars that autonomously leave the highway, drive to a battery swap station, swap the battery and return. And if that’s not enough, Euro NCAP now grades the performance of ADAS features, adding a further layer of pressure from the marketing teams that use the scores to sell cars.

With so much talk of autonomy in our industry, it might seem obvious to treat the human as redundant and to carry out testing and validation offline. The reality is that none of these vehicles will operate in isolation: their behaviour has to be safe, comfortable and confidence-inspiring for their human occupants. Ultimately, and before we even consider the impact (hopefully not!) of other vehicles, it’s the behaviour and responses of humans that remain central to the acceptance of any vehicle equipped with ADAS.

Having a safe, repeatable and cost-effective means of capturing this behaviour is leading more OEMs to embrace DIL simulation wholeheartedly. This unique form of simulation actively invites the varied and sometimes unpredictable actions of human beings, but within the variable-yet-controlled conditions of the virtual world. It allows an enormous palette of design iterations and edge cases to be assessed, even when no physical hardware exists.

DIL developments

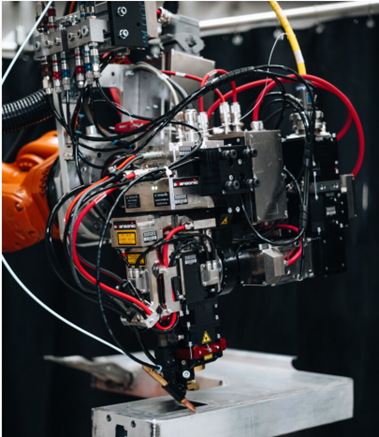

In just the last five years, significant advances in DIL technologies have moved simulation to a new level of immersion for both the human participants and the ADAS systems being tested. These include motion control, the ability to integrate wide-ranging hardware and software systems, crisper graphics (with 4K rendering and full 360° visual immersion increasingly the standard) and real-time performance. Today, complex effects such as fog, water on sensors and lens flare can all be accurately modelled in software, repeatably and safely, in a controlled laboratory environment. Engineers can now aim a physical camera at the simulator’s projection screen so that the camera takes its video feed from the simulated scene. Likewise, LiDAR emulators can be used to feed real-time information to actual sensor hardware, and vehicle network connections such as CAN and FlexRay can be incorporated into the hardware under test. It all adds up to a truly authentic environment in which electronics modules can operate and be validated and, most importantly, a virtual environment that real people can experience and take part in at the same time.

Motion and emotion

High-fidelity, high-dynamic, human-centric machinery such as Ansible Motion’s patented Stratiform Motion System (SMS) can reproduce the majority of dynamic manoeuvres for ground vehicle applications, with sustained G-forces and high-frequency content. Not only do such DIL simulators give a true sense of the physical behaviour of the vehicle in its autonomous or assisted mode, but they can also be used to carefully analyse the crucial point of handover between human and automation.

For conditionally automated (SAE Level 3) systems, the moment the driver passes control to the vehicle is likely to be a psychologically significant one. Even more crucial will be the point where control is returned to the driver. Here, the vehicle is responsible for clearly communicating the upcoming handover while also ensuring that the driver is ready to take control. Knowing at what point to intervene in an unfolding scenario is key to striking the right balance between driver control and active safety, and this can be tested in a DIL simulator with drivers of varying experience and ability, not just experienced evaluators.

Overly intrusive assistance systems, as is frequently cited in the case of some Blind Spot Monitoring systems, may inadvertently encourage drivers to switch them off or ignore them. This highlights the importance of ensuring that ADAS interventions align with human expectations and behaviours. An unexpected intervention from a system such as AEB can be highly disconcerting and, at worst, dangerous if other vehicles are following close behind.

User interface

Used in place of real-world testing, engineering-class DIL simulators are well suited to addressing scenarios such as loss of traction during high-speed cornering before they arise on the road. Such scenarios are likely to prompt a reaction from the human driver (albeit not necessarily the correct one), so it’s vital to ensure that the ADAS intervention won’t cause the driver to over-correct or unintentionally oppose the inputs of the system. Identifying and mitigating that risk is another compelling advantage of using a fully dynamic simulator in place of real-world testing for the bulk of the development work. DIL will never replace the benefits of pounding around a test track or proving ground, but it can do the heavy lifting, bringing development to the point where the systems and the driving experience can be refined in the later stages.

User experience

The user experience is also a vital consideration in ADAS development. An accurate and immersive simulation environment allows engineers to explore how customers inside the vehicle perceive ADAS interventions and related attributes. Does the vehicle’s behaviour instil confidence? Is it comfortable, or even involving? Does it convey the brand’s essence? Answers to these questions can be found faster with real people in an engineering-class DIL simulator.

Another point to consider is the level of confidence that an ADAS function instils in the vehicle’s occupants. Humans tend to look for cues in the vehicle’s behaviour that indicate it’s being driven correctly, such as slowing down in good time on the approach to a junction, or something far more subtle, such as positioning the car for an upcoming turn or pausing slightly upon sensing that the vehicle in front might be about to change lanes. DIL simulators are being used to determine whether emphasising these cues might make passengers feel more at ease.

For so many reasons, when it comes to a task as complex as ADAS development, the human aspect is simply too important to ignore.

Salman Safdar, DIL Expert at Ansible Motion