Robert Baribault, Ph.D. Principal Systems Architect, LeddarTech

Introduction

Electric vehicles (EVs) are leading the way towards safer transportation and cleaner environments. EV deployment is accelerating as new applications emerge ever more rapidly. Driverless transport, or Autonomous Driving (AD), applications such as robotaxis, delivery vehicles, and cleaning units are becoming increasingly common.

One of the main goals of AD is to deliver fast service in a targeted location with minimal impact on everyday life and little human interaction. Every AD application must detect and classify the objects in its surroundings, and LiDAR is one of the sensors that can do this.

Automated Driving and Driving Functions

A driverless vehicle has the same requirements as a human-driven one. It must stay in its lane or change lanes, accelerate, and brake, among other functions. Seen from the sidewalk, an AD vehicle should behave like a vehicle with a driver, even though the absence of a person is quite obvious. To drive safely, an AD EV must perform the same functions as a driver: position itself within its environment as it moves along its itinerary, and predict the safest route to take.

Figure 1 – Driverless car high-level functions

Figure 1 shows a simplified view of the driver’s functions, namely Sensing, Analyzing, and Reacting.

Sensing is the capacity to determine what is outside the vehicle and where these “objects” are relative to the EV. Analyzing uses the sensing data and the EV’s known reacting capacity to predict the correct path, directing the EV safely to where it needs to go while protecting the internal load and the integrity of the vehicle. Reacting corresponds to braking, accelerating, and turning the wheels or, simply put, driving. The three functions interact continuously to drive the EV. Sensing, Analyzing, and Reacting do not have the same complexity, but they share the same basic requirements: they all need to be swift, precise, and reliable.
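To make the division of labor concrete, the Sensing, Analyzing, and Reacting cycle can be sketched as three chained functions. This is a minimal sketch under stated assumptions. Every class, function name, and rule in it is hypothetical and chosen only for illustration, including the ±10° "ahead" corridor and the rule "brake if an object ahead is inside the stopping distance". A real Analyzing function is far more elaborate.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    distance_m: float   # range to the object (hypothetical field)
    azimuth_deg: float  # angle relative to the vehicle heading, 0 = straight ahead


@dataclass
class Command:
    brake: bool
    steer_deg: float


def sense(ranges_m: List[float], azimuths_deg: List[float]) -> List[Detection]:
    """Sensing (hypothetical): pair each measured range with its angular position."""
    return [Detection(r, a) for r, a in zip(ranges_m, azimuths_deg)]


def analyze(detections: List[Detection], stopping_distance_m: float) -> Command:
    """Analyzing (toy rule): brake if anything in an assumed +/-10 deg corridor
    ahead is closer than the stopping distance, otherwise keep going straight."""
    ahead = [d for d in detections if abs(d.azimuth_deg) < 10.0]
    if any(d.distance_m <= stopping_distance_m for d in ahead):
        return Command(brake=True, steer_deg=0.0)
    return Command(brake=False, steer_deg=0.0)


def react(cmd: Command) -> str:
    """Reacting: a real system drives actuators; here we only describe the action."""
    return "brake" if cmd.brake else f"drive, steer {cmd.steer_deg:+.1f} deg"


# One cycle of the loop; an assumed 25 m stopping distance is used for illustration.
print(react(analyze(sense([30.0, 15.0], [0.0, 45.0]), stopping_distance_m=25.0)))
print(react(analyze(sense([20.0], [0.0]), stopping_distance_m=25.0)))
```

In a vehicle, these three calls would repeat at the sensor frame rate. The object at 15 m is ignored in the first cycle only because it lies outside the assumed corridor.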

Sensing Technologies and Key Parameters

Sensing is crucial for AD. Reacting depends on Analyzing, which in turn depends on accurate knowledge of the environment, near and far, in all directions. Common and reliable AD sensors are cameras, radars, and solid-state LiDARs. Their key specifications are the object’s distance and angular position relative to the EV.

Cameras have the highest angular resolution and can detect color and other attributes that are useful for recognizing road signs and streetlights, for example. However, cameras do not provide intrinsic time-based object distance information. Radars and LiDARs are both self-contained distance measurement devices because they emit and receive their own electromagnetic waves: light for LiDARs, radio waves for radars. LiDARs have the advantage in range accuracy and field-of-view resolution, while radars perform better in inclement weather and have a longer daytime range. Both LiDARs and radars operate very well at night but offer less angular resolution than cameras. Because cameras rely on ambient light, they operate better in the daytime than at night. Finally, both LiDARs and cameras can offer an ultra-wide field of view, which is more difficult to achieve with radars.

In all cases, it is desirable to have detection all around the vehicle, as shown in Figure 2, with a 180° sensor deployed on each side of an AD EV.

The maximum detection distance, or range, should cover the maximum stopping distance, which is set by the vehicle’s maximum speed, the reaction time, and the maximum deceleration. For example, an AD vehicle travelling at 40 km/h needs about 23 meters to stop when the reaction time is half a second and the deceleration is 3.5 m/s². Roughly 5.6 m are covered during the reaction time and 17.6 m while braking. Other EV types without passengers may tolerate a higher deceleration rate, in which case the stopping distance will be shorter.
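The stopping distance in this example follows from basic kinematics. The vehicle covers v·t at constant speed during the reaction time t, then v²/(2a) while braking at constant deceleration a. The sketch below makes two simplifying assumptions: deceleration is constant from the moment braking starts, and there is no actuation delay beyond the stated reaction time. The function name is illustrative only.

```python
def stopping_distance(speed_kmh: float, reaction_time_s: float, decel_mps2: float) -> float:
    """Reaction distance (v * t) plus braking distance (v^2 / (2a)), in meters.

    Assumes constant deceleration once braking starts.
    """
    v = speed_kmh / 3.6                        # km/h -> m/s
    reaction_distance = v * reaction_time_s    # travelled before the brakes act
    braking_distance = v ** 2 / (2.0 * decel_mps2)
    return reaction_distance + braking_distance


# 40 km/h, 0.5 s reaction time, 3.5 m/s^2 deceleration
print(round(stopping_distance(40, 0.5, 3.5), 1))  # 23.2
```

The sensor range requirement can then be set to at least this value at the vehicle’s maximum speed. In practice, a safety margin would typically be added on top.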

Sensing Distance, Time of Flight, Distance Resolution, and Full Waveform

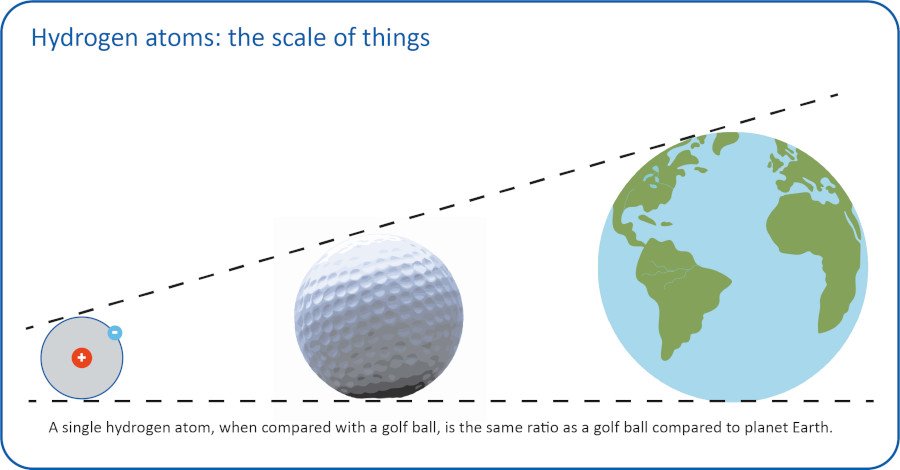

LiDARs and radars use time-of-flight (ToF) measurements to determine the position of objects, which makes them self-contained distance and position measurement sensors. ToF is based on echoes of short-duration pulses. The sensor emits a brief, high-intensity pulse and measures the time it takes to receive a reflection from an external object. The farther an object is from the sensor, the longer the delay between the emission and the reception of the pulse. In this context, the main difference between radars and LiDARs is the emitted pulse duration. A LiDAR can emit pulses lasting only nanoseconds, shorter than radar pulses, which yields higher position precision.
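The two relationships behind this paragraph can be written in a few lines. First, because the pulse travels to the object and back, the distance is d = c·t/2. Second, in the classic pulsed-ranging picture, two echoes closer together than c·τ/2 overlap in time for a pulse of duration τ, which is why shorter pulses give finer position precision. This is a minimal sketch. The pulse durations used below are illustrative values only, not specifications of any particular LiDAR or radar.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, in vacuum (close enough for air here)


def tof_to_distance(round_trip_s: float) -> float:
    """Distance to the reflecting object: the pulse goes out and back, so d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def pulse_range_resolution(pulse_duration_s: float) -> float:
    """Smallest separation at which two echoes of a pulse of duration tau do not overlap: c * tau / 2."""
    return SPEED_OF_LIGHT * pulse_duration_s / 2.0


print(f"{tof_to_distance(200e-9):.2f} m")         # echo after 200 ns -> 29.98 m
print(f"{pulse_range_resolution(5e-9):.2f} m")    # illustrative 5 ns pulse -> 0.75 m
print(f"{pulse_range_resolution(50e-9):.2f} m")   # illustrative 50 ns pulse -> 7.49 m
```
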

ToF is applied to each pixel in the field of view. LiDARs use a combination of multi-channel lasers and photodiodes to increase the number of pixels in the scene. Most interestingly for EV path prediction, a ToF LiDAR can provide the distances of two objects within the same pixel with high precision.
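Measuring two objects in one pixel amounts to finding every echo in that pixel’s sampled return signal (its full waveform), not just the first or strongest. The minimal sketch below does this with simple local-maximum detection above a threshold and converts each peak’s sample index to a distance. Several details are assumptions for illustration: the 1 ns sample period, the threshold, the synthetic waveform, and the convention that sample 0 coincides with laser emission. Production LiDAR processing is considerably more sophisticated.

```python
from typing import List

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def detect_echoes(waveform: List[float], sample_period_s: float, threshold: float) -> List[float]:
    """Return the distance (m) of every local peak above `threshold` in one pixel's waveform.

    Assumes sample 0 is taken at the moment the laser pulse is emitted.
    """
    distances = []
    for i in range(1, len(waveform) - 1):
        # ">=" on the left and ">" on the right keeps one sample from a flat-topped peak
        is_peak = waveform[i] >= waveform[i - 1] and waveform[i] > waveform[i + 1]
        if is_peak and waveform[i] > threshold:
            round_trip_s = i * sample_period_s
            distances.append(SPEED_OF_LIGHT * round_trip_s / 2.0)
    return distances


# Synthetic waveform with an assumed 1 ns sample period
wave = [0.0] * 100
wave[19:22] = [0.1, 0.3, 0.1]   # weak first echo, e.g. a semi-transparent object
wave[59:62] = [0.4, 1.0, 0.4]   # stronger echo from an object behind it
print([round(d, 2) for d in detect_echoes(wave, 1e-9, threshold=0.2)])  # [3.0, 8.99]
```
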

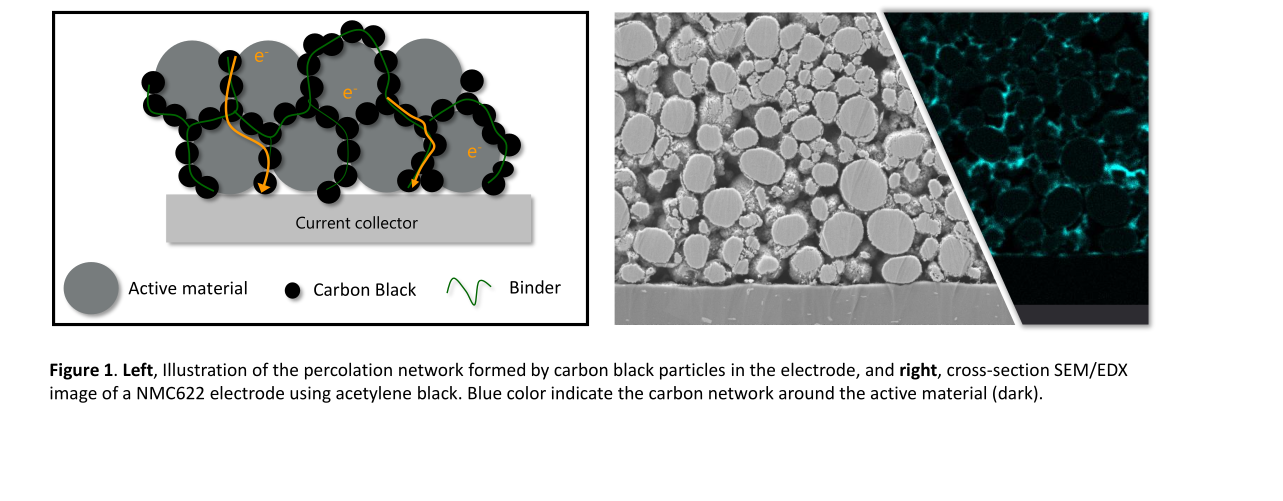

Figure 3 shows how multiple pulses can be received in the same pixel. The first, small pulse corresponds to a low-reflectivity or semi-transparent object. The light transmitted “through” the first object propagates towards the second object, which reflects light back to the LiDAR and creates a second peak in the detection data. Pulse shape information can also be used to determine the object type. Figure 4 shows that different reflection types produce different shapes in the detection data, from which the size and shape of objects can be inferred to help object classification.
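One simple shape feature that can be extracted from each echo is its width. For instance, a return from a surface inclined to the beam is typically broader than one from a surface facing the sensor. The sketch below measures the full width at half maximum (FWHM) of an echo, interpolating linearly between samples. Using FWHM as the shape feature, along with the example waveforms, is an assumption for illustration. The document does not specify which pulse-shape features are used.

```python
from typing import List


def half_max_width(waveform: List[float], peak_index: int, sample_period_s: float) -> float:
    """Full width at half maximum of the echo around `peak_index`, in seconds.

    Walks outward from the peak until the signal drops below half the peak value,
    then linearly interpolates where the half-maximum level is crossed.
    """
    half = waveform[peak_index] / 2.0
    n = len(waveform)

    left = peak_index
    while left > 0 and waveform[left - 1] >= half:
        left -= 1
    if left > 0:  # crossing lies between samples left-1 (below half) and left (at/above half)
        a, b = waveform[left - 1], waveform[left]
        left_x = (left - 1) + (half - a) / (b - a)
    else:
        left_x = 0.0

    right = peak_index
    while right < n - 1 and waveform[right + 1] >= half:
        right += 1
    if right < n - 1:  # crossing lies between samples right (at/above half) and right+1 (below)
        a, b = waveform[right], waveform[right + 1]
        right_x = right + (a - half) / (a - b)
    else:
        right_x = float(n - 1)

    return (right_x - left_x) * sample_period_s


narrow = [0.0, 0.2, 1.0, 0.2, 0.0]            # sharp echo, peak at index 2
wide = [0.0, 0.2, 0.6, 1.0, 0.6, 0.2, 0.0]    # broadened echo, peak at index 3
print(half_max_width(narrow, 2, 1e-9), half_max_width(wide, 3, 1e-9))
```

A classifier would then use such per-echo features, together with the echo distances and intensities, as inputs.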


Sensing Angular Resolution and Object Classification

Determining the nature of objects outside the vehicle is critical to predicting the correct EV path and sending the correct instructions to Reacting. As humans, we classify objects based on their size, color, speed, and other factors. We rely chiefly on angular resolution to do this, but our eyes have so many functions that they should not be the reference for AD.

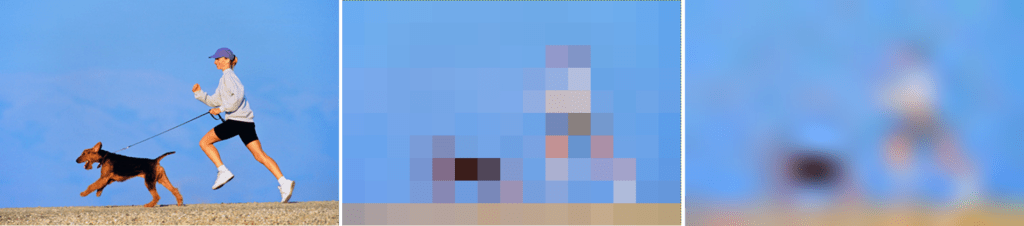

The optimal sensor resolution for object classification is considerably lower than that of human vision. Figure 5 shows the same object at the same distance simulated at different resolutions. In the leftmost image, we immediately recognize a woman running with a dog. Details such as the ponytail, the leash, and the baseball cap are significant to human perception but irrelevant to AD. They are visible because of the relatively high resolution of 480 x 320 pixels, which creates a very clear image and might seem to be a requirement for AD.

The central image has only 15 x 10 pixels and provides much less information. We still distinctly see two objects, one taller and one shorter, and get a good idea of their horizontal size. Based on this image, we can predict that the AD EV needs to react and steer away from these objects. The position uncertainty caused by the pixel size will make the EV move slightly more than the minimum needed to avoid the objects. This margin needs to be embedded in the Analyzing function to reduce the risk of accidents. Over a few acquisition frames, the Analyzing function will determine that the objects are moving slowly and will predict a safe path.

The rightmost image of Figure 5 shows the same data as the central image, enhanced by interpolation that increases the number of pixels before the data reaches the Analyzing function, in order to increase the classification probability. The content of the rightmost image is much clearer, and we can distinguish a human and a small animal. We may conclude that a lower resolution is sufficient for AD applications.
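The interpolation step for the rightmost image of Figure 5 can be illustrated with bilinear interpolation, one common choice for upsampling a coarse grid. The document does not say which interpolation method was used, so bilinear is an assumption here, as are the function name and the test pattern. The method does not add new measurements. It only estimates values between the existing pixels, which is why the position margin discussed above remains necessary.

```python
from typing import List

Grid = List[List[float]]


def bilinear_upsample(grid: Grid, factor: int) -> Grid:
    """Upsample a 2-D grid by an integer factor using bilinear interpolation.

    Output sample (r, c) maps back to input coordinates (r / factor, c / factor),
    and its value is a weighted average of the four surrounding input samples.
    Original samples are preserved exactly at the aligned positions.
    """
    rows, cols = len(grid), len(grid[0])
    if rows < 2 or cols < 2 or factor < 1:
        raise ValueError("need at least a 2 x 2 grid and factor >= 1")
    out_rows, out_cols = (rows - 1) * factor + 1, (cols - 1) * factor + 1
    out: Grid = []
    for r in range(out_rows):
        y = r / factor
        y0 = min(int(y), rows - 2)  # top row of the 2 x 2 input neighbourhood
        fy = y - y0                 # vertical weight in [0, 1]
        row = []
        for c in range(out_cols):
            x = c / factor
            x0 = min(int(x), cols - 2)
            fx = x - x0
            top = grid[y0][x0] * (1 - fx) + grid[y0][x0 + 1] * fx
            bottom = grid[y0 + 1][x0] * (1 - fx) + grid[y0 + 1][x0 + 1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A 15 x 10 (width x height) test pattern, upsampled 4x
low_res = [[float((r + c) % 3) for c in range(15)] for r in range(10)]
high_res = bilinear_upsample(low_res, 4)
print(len(high_res[0]), "x", len(high_res))  # 57 x 37
```
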

Figure 6 shows another example of combining full-waveform data with a lower resolution for object classification. In this use case, the truck behind the cyclist is clearly visible, and the resolution is sufficient to classify both objects. The full-waveform data of Figure 3 is extracted from this scene. The first peak corresponds to the bicycle, while the second peak is light reflected from the truck.

Classification and Analysis Function Training

For object classification, the Analysis function is based on neural networks that require training. Images in which known objects have been tagged are used to teach the AI to classify objects. LiDARs can provide the distance of each detection and help build a three-dimensional map that makes it easier to sort the data for classification.
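Building the three-dimensional map starts with converting each LiDAR detection from sensor coordinates (range plus the pixel’s azimuth and elevation) into Cartesian x, y, z. The sketch below shows that conversion for a single pixel with two echoes. The frame convention is an assumption chosen for illustration: x points forward, y to the left, and z up, with azimuth measured from x towards y and elevation measured up from the horizontal plane. The echo distances are made-up example values. Because the two echoes end up as two points at different depths, they can be separated before classification.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def to_cartesian(distance_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one detection (range, azimuth, elevation) to x, y, z in the sensor frame.

    Assumed frame: x forward, y left, z up; azimuth from x towards y,
    elevation up from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = distance_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), distance_m * math.sin(el))


def pixel_to_points(echo_distances_m: List[float], azimuth_deg: float, elevation_deg: float) -> List[Point]:
    """Every echo in a pixel shares the pixel's direction but has its own range."""
    return [to_cartesian(d, azimuth_deg, elevation_deg) for d in echo_distances_m]


# Hypothetical pixel at 5 deg azimuth, 0 deg elevation, with echoes at 3 m and 9 m
for x, y, z in pixel_to_points([3.0, 9.0], 5.0, 0.0):
    print(f"x={x:.2f} m  y={y:.2f} m  z={z:.2f} m")
```
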

Figure 7 shows classification results using the raw data from a Leddar™ Pixell LiDAR. The resolution of a few pixels per object is sufficient for correct identification.
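Whether an object spans "a few pixels" depends on its size, its distance, and the angle covered by each pixel. The same pixel angle also sets the lateral footprint of one pixel at a given distance, which is the position uncertainty the Analyzing function must absorb as a margin. The sketch below computes both quantities. The 1° pixel angle and the object sizes are assumed example values, not specifications of the Pixell or any other sensor.

```python
import math


def pixels_across(object_size_m: float, distance_m: float, pixel_angle_deg: float) -> float:
    """Approximate number of pixels spanned by an object of the given size at the given distance."""
    subtended_deg = math.degrees(2.0 * math.atan(object_size_m / (2.0 * distance_m)))
    return subtended_deg / pixel_angle_deg


def pixel_footprint(distance_m: float, pixel_angle_deg: float) -> float:
    """Width (m) covered by a single pixel at the given distance: 2 * d * tan(theta / 2)."""
    return 2.0 * distance_m * math.tan(math.radians(pixel_angle_deg) / 2.0)


# Assumed 1 deg pixels; a 0.5 m wide pedestrian at 10 m and at 25 m
for d in (10.0, 25.0):
    print(f"{d:.0f} m: {pixels_across(0.5, d, 1.0):.1f} pixels, "
          f"footprint {pixel_footprint(d, 1.0):.2f} m per pixel")
```

As the distance grows, the object covers fewer pixels and each pixel covers more ground. This trade-off is the reason the classification and the safety margin have to be evaluated over the whole operating range.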

AI training can start with simulations, but the final training must use data from real-life situations. The next step towards the final implementation is prototyping and data collection on a moving vehicle.

Conclusion

Automated-driving electric vehicles are the short-, medium-, and long-term future of transportation. Emerging applications are being developed to facilitate services such as public transport, goods delivery, and other specialized uses. The ability to position and classify objects in the vehicle’s surroundings is key to path prediction and increased safety. AD relies on multiple sensors. LiDAR, as one of them, provides intrinsic distance measurement and offers sufficient angular resolution for object detection and classification. The current successful demonstrations of AD with EVs are critical stepping stones toward mass-volume markets, and LiDARs are a critical part of the solution.